In today’s multi-device world, deploying object detection models seamlessly across platforms—whether on the web, mobile, or edge devices—is critical. However, many users simply want to experiment and learn how to build custom models without the complexity of setting up extensive APIs. In this article, we present an end-to-end solution that automates the entire process—from image annotation to model training and export for various deployment targets—empowering both professionals and beginners to quickly create, test, and even run models directly on their devices without relying on external APIs.

Motivation

Creating custom object detection models can often feel overwhelming, especially when transitioning from annotated images to a deployable model. The process typically involves multiple tools, each with its own learning curve and configuration requirements. This can discourage people who simply want to explore computer vision or test a small idea.

To lower the barrier to entry, this project brings together proven tools—like Label Studio, YOLO, and TensorFlow—and connects them in a way that minimizes manual steps. It enables users to go from labeling images to training a YOLO model and exporting it in formats that can run directly on the web or mobile devices.

Whether the goal is to build a real-world application or just explore what’s possible, this approach offers a lightweight and flexible way to experiment with object detection across platforms.

Tools That Power the Process

The workflow is built by combining three well-established tools, each playing a role in the process—from annotating images to deploying object detection models across different platforms.

🖍 Label Studio

A powerful, open-source data labeling tool that makes it easy to annotate images. With support for bounding boxes and customizable interfaces, it’s ideal for preparing the ground truth data needed for object detection training. It also supports exporting directly in YOLO-compatible formats, which saves time during dataset preparation.

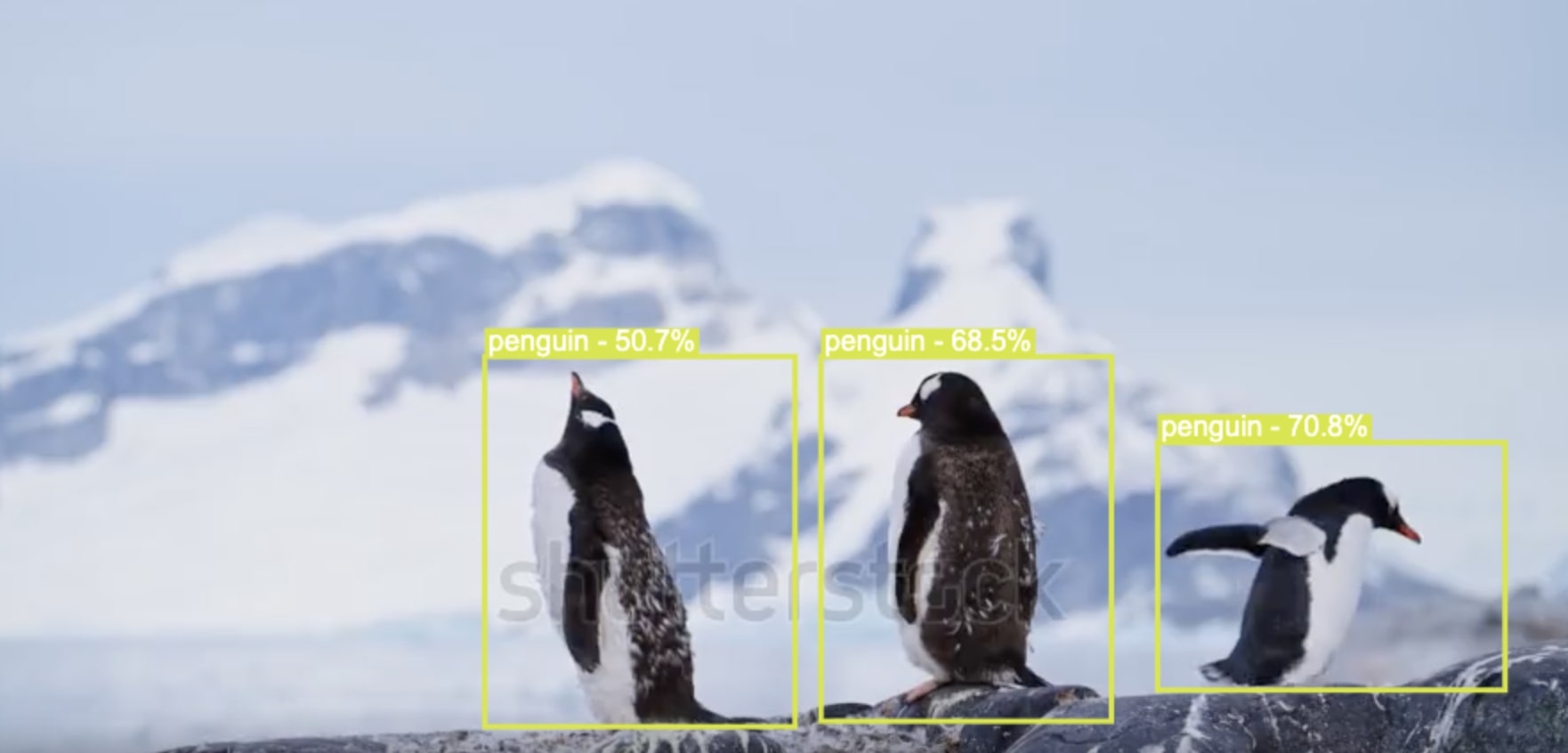
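For reference, a YOLO-format label file stores one object per line as `class_id x_center y_center width height`, with all four coordinates normalized to the 0-1 range relative to the image size. The sketch below is a hypothetical helper (not part of Label Studio or the project) that parses one such line and converts it back to pixel corner coordinates. It can be handy for sanity-checking an export before training.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A bounding box in absolute pixel coordinates (top-left / bottom-right corners)."""
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_line(line: str, img_w: int, img_h: int) -> Box:
    """Convert one YOLO label line ('cls xc yc w h', normalized 0-1) to pixel corners."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:])
    # Scale the normalized center and size back to pixels...
    xc, w = xc * img_w, w * img_w
    yc, h = yc * img_h, h * img_h
    # ...then derive the corners from the center and half-extents.
    return Box(class_id, xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2)


if __name__ == "__main__":
    # A box centered in a 640x480 image, covering half its width and height.
    print(parse_yolo_line("0 0.5 0.5 0.5 0.5", 640, 480))
    # Box(class_id=0, x_min=160.0, y_min=120.0, x_max=480.0, y_max=360.0)
```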

🚀 YOLO (You Only Look Once)

A state-of-the-art, real-time object detection system. In this project, YOLOv8 is used to train custom models based on the images and annotations provided. It’s fast, accurate, and widely adopted across the machine learning community. YOLO is at the core of the training phase and outputs a .pt model that can later be converted for different platforms.

📦 TensorFlow

Once the YOLO model is trained, it’s converted into TensorFlow formats:

TensorFlow.js allows running the model directly in the browser with no server-side calls, making it perfect for web deployment.

TensorFlow Lite enables the model to run efficiently on Android and iOS devices, even offline.

These export formats make it possible to deploy and run the model entirely on the device—whether that’s a browser or smartphone—without depending on external APIs or cloud services.

What’s Custom-YOLO-Everywhere and How to Use It?

The Custom-YOLO-Everywhere tool guides users from raw annotations in Label Studio to a trained YOLO model, and finally to an exported TensorFlow-compatible format that is ideal for running on the web or on mobile devices.

The project helps you:

- 📦 Prepare datasets

- ⚙ Train YOLOv8 models

- 🚀 Export to TensorFlow.js or TensorFlow Lite

- 💡 Run the model directly on-device without needing cloud APIs

How It Works (Step-by-Step):

After cloning the project from GitHub, follow the steps below.

Start the Environment

- First, launch the Docker containers using the provided docker-compose.yml:

docker-compose up --build -d

- Label Studio will then run locally and be accessible at http://localhost:8080.

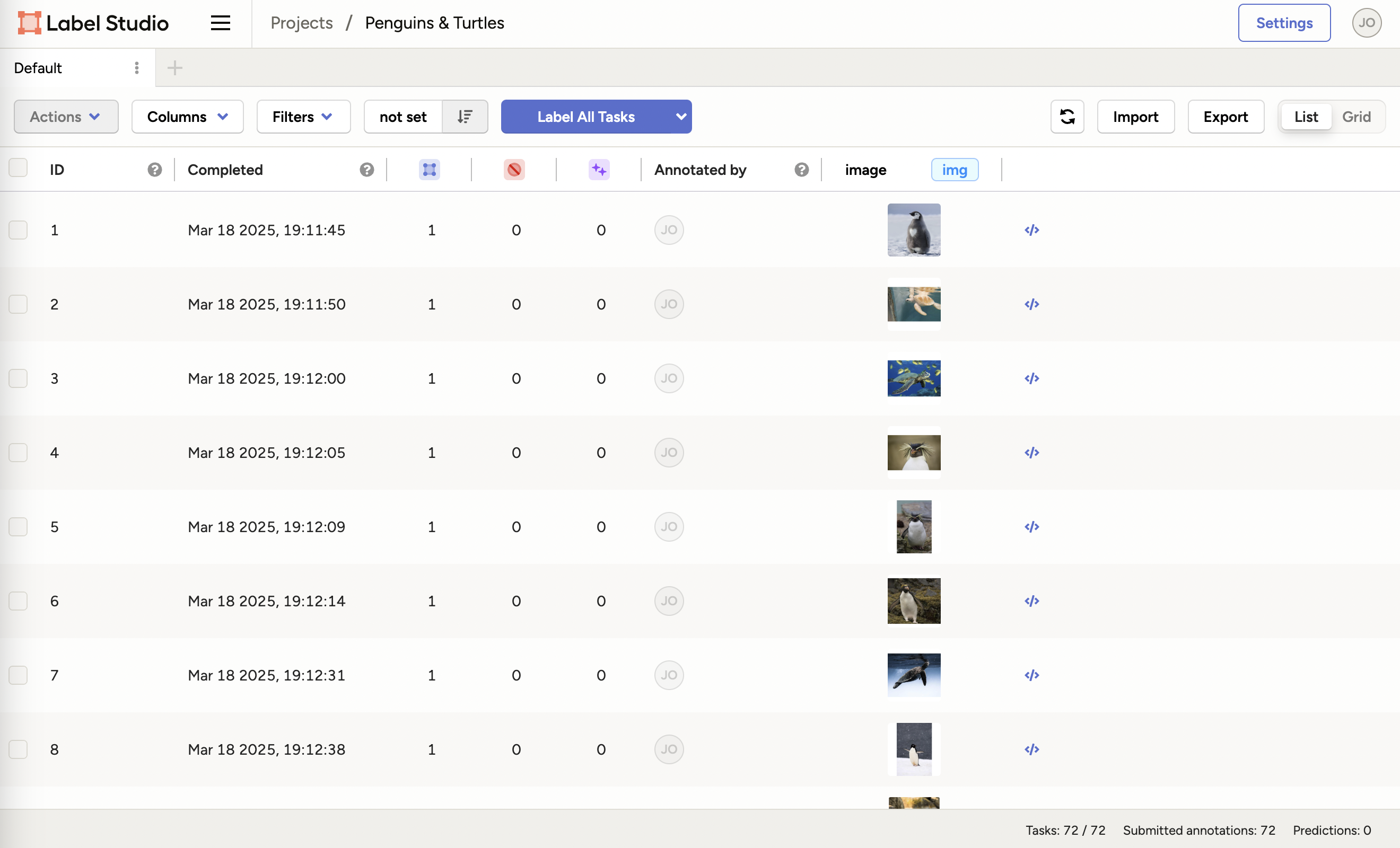
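Because `-d` returns immediately, Label Studio may need a few seconds before it accepts connections. A minimal readiness check, assuming only that the UI is served at the URL above, could look like this. The function name and its defaults are illustrative, not part of the project. Any HTTP response, even an error status, is treated as "up". Proxies are bypassed because the target is local.

```python
import time
import urllib.error
import urllib.request


def wait_for_label_studio(url: str = "http://localhost:8080",
                          timeout_s: float = 60.0,
                          interval_s: float = 2.0) -> bool:
    """Poll `url` until the server answers over HTTP; False if `timeout_s` elapses first."""
    # Local service: ignore any http_proxy settings inherited from the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with opener.open(url, timeout=interval_s):
                return True  # Got a successful HTTP response.
        except urllib.error.HTTPError:
            return True  # The server answered with an error status, so it is running.
        except OSError:
            pass  # Connection refused or timed out: containers are still starting.
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Calling `wait_for_label_studio()` right after `docker-compose up --build -d` blocks until the UI is reachable, or returns `False` after one minute so a script can report the failure instead of hanging.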

Annotate Your Images

- Now that Label Studio is running, set up a new project and start annotating your images.

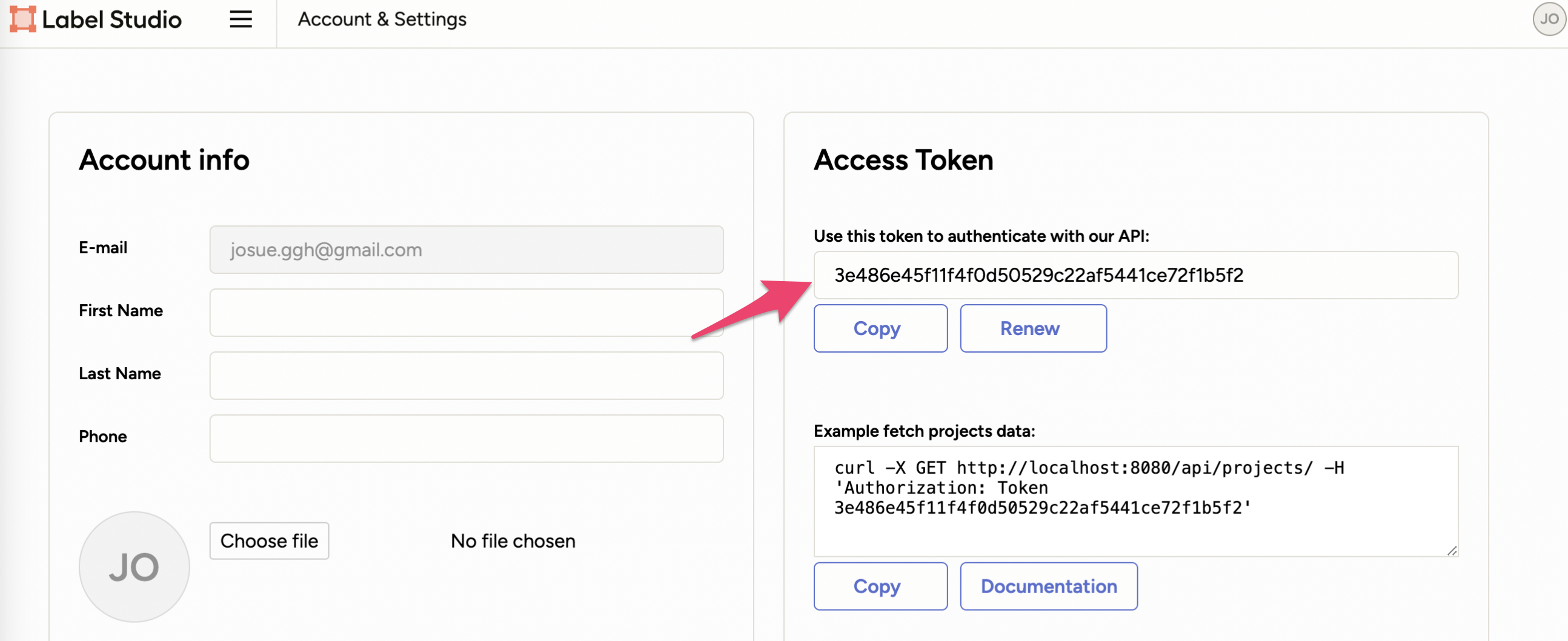

- Retrieve your Label Studio access token from the UI; the client script will prompt you for it when it starts.
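The token is what lets a script talk to Label Studio's REST API. As a rough sketch of how a client could list projects with it, assuming a legacy API token sent as `Authorization: Token <token>` to the `/api/projects` endpoint (newer Label Studio releases also offer personal access tokens with a different scheme), consider the following. The function names are hypothetical; the project's own `client.py` may work differently.

```python
import json
import urllib.request


def parse_projects(payload) -> list[tuple[int, str]]:
    """Extract (id, title) pairs from a projects response.

    Accepts either a paginated dict with a "results" key or a bare list.
    """
    items = payload["results"] if isinstance(payload, dict) else payload
    return [(p["id"], p.get("title") or "") for p in items]


def list_projects(base_url: str, token: str) -> list[tuple[int, str]]:
    """Fetch the projects visible to `token` (assumes legacy 'Token' auth)."""
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/api/projects",
        headers={"Authorization": f"Token {token}"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return parse_projects(json.load(resp))
```

For example, `for pid, title in list_projects("http://localhost:8080", "<your-token>"): print(pid, title)` prints one line per project, which is roughly the list the script shows in the next step.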

Connect and Configure

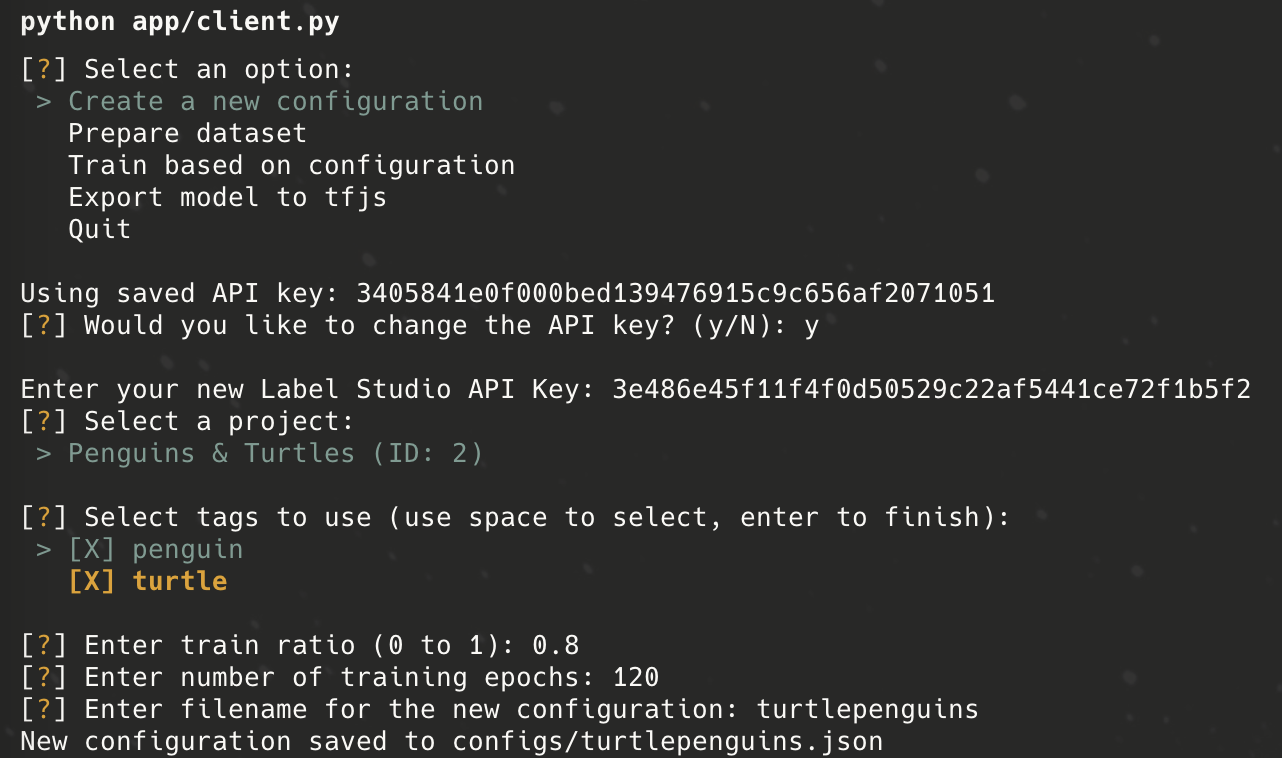

- Run the script:

python app/client.py

Select and Save Your Project

- The script will list available projects.

- Choose the one you want and answer a few setup questions.

- A configuration JSON file will be generated to make your setup reproducible and re-runnable.

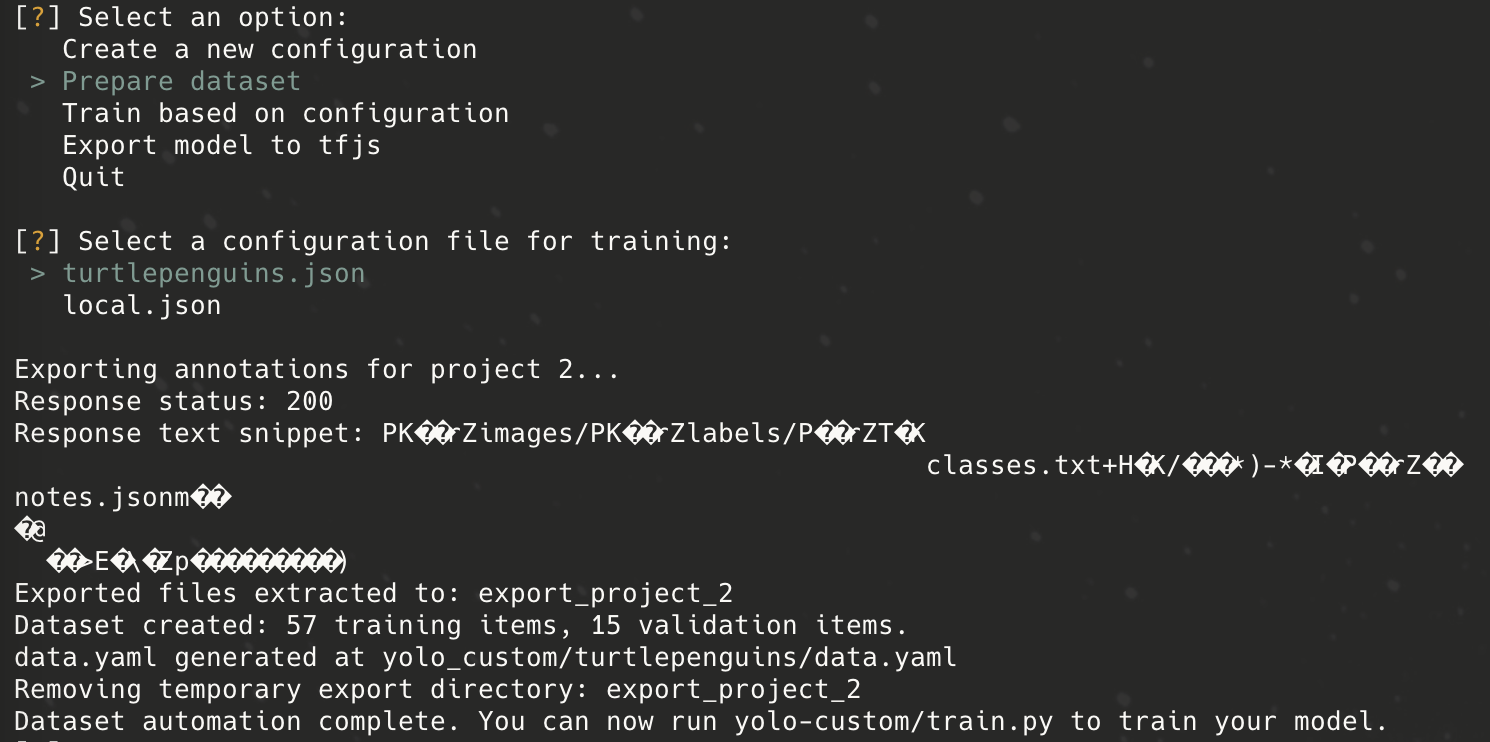
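The value of the saved configuration is that the same run can be repeated without answering the questions again. The exact fields the tool writes are not described here, so the `RunConfig` schema below is entirely hypothetical; it only illustrates the save-and-reload pattern with the standard library.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class RunConfig:
    """Hypothetical settings for one run; the real tool's schema may differ."""
    project_id: int
    base_model: str = "yolov8n.pt"
    epochs: int = 50
    img_size: int = 640
    val_fraction: float = 0.2


def save_config(cfg: RunConfig, path: Path) -> None:
    # Indented, key-sorted JSON keeps diffs between runs easy to read.
    path.write_text(json.dumps(asdict(cfg), indent=2, sort_keys=True))


def load_config(path: Path) -> RunConfig:
    # Unknown or missing keys raise TypeError, surfacing a stale config early.
    return RunConfig(**json.loads(path.read_text()))
```

Keeping the configuration in a plain dataclass means a typo in a hand-edited file fails loudly on load rather than silently changing training behavior.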

Prepare Your Dataset

- With the configuration in place, choose the “prepare dataset” option to download images and labels from Label Studio in YOLO format.
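YOLO training needs separate training and validation images, so a preparation step typically splits the downloaded files. The sketch below assumes the export's usual layout, with images in an `images/` folder and same-named `.txt` files in a sibling `labels/` folder. The helper names, the 20% default, and the seeded shuffle are illustrative choices, not necessarily what the project does.

```python
import random
from pathlib import Path


def split_dataset(image_paths: list[Path], val_fraction: float = 0.2,
                  seed: int = 0) -> tuple[list[Path], list[Path]]:
    """Shuffle deterministically and split images into (train, val) lists."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    paths = sorted(image_paths)          # stable starting order regardless of input order
    random.Random(seed).shuffle(paths)   # same seed -> same split on every run
    n_val = max(1, round(len(paths) * val_fraction)) if paths else 0
    return paths[n_val:], paths[:n_val]


def label_for(image: Path) -> Path:
    """YOLO convention: <root>/images/x.jpg pairs with <root>/labels/x.txt."""
    return image.parent.parent / "labels" / f"{image.stem}.txt"
```

Seeding the shuffle keeps the split reproducible, which fits the goal of a re-runnable configuration: retraining compares models on the same validation images.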

Train the YOLO Model

- Select “train based on configuration.”

- The training process will begin using the parameters and data from the earlier step.

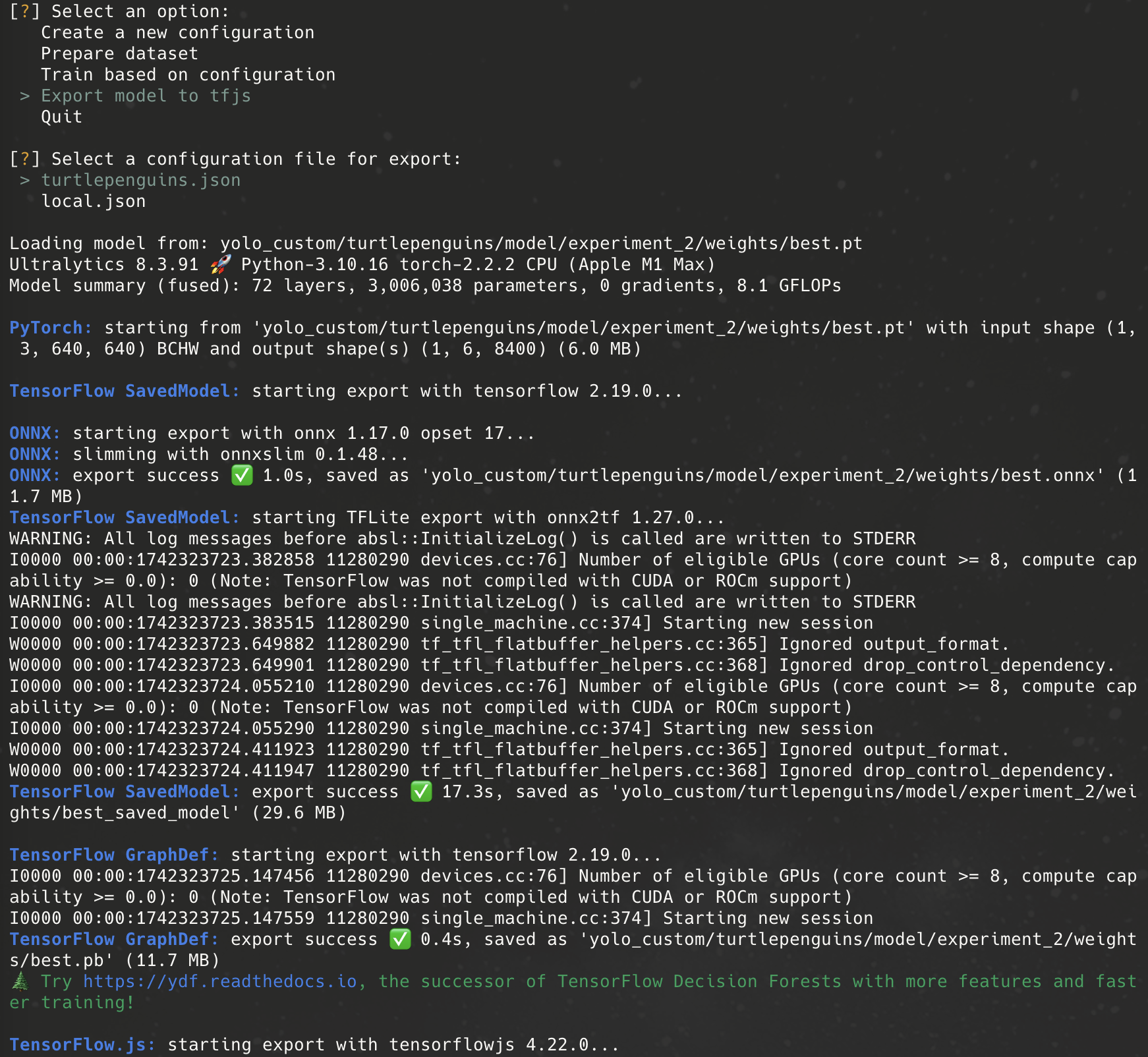
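Under the hood, YOLOv8 training with the Ultralytics package reads a small dataset YAML file (root path, train/val image folders, class names) passed via `data=`. A minimal sketch of that step follows. It assumes images were split into `images/train` and `images/val` with matching `labels/train` and `labels/val` folders; the helper names and defaults are hypothetical. The `ultralytics` import is deferred so the YAML helper works without the package installed.

```python
import json
from pathlib import Path


def write_data_yaml(dataset_dir: Path, class_names: list[str]) -> Path:
    """Write the dataset YAML that Ultralytics YOLOv8 reads via `data=`."""
    lines = [
        f"path: {dataset_dir.resolve()}",
        "train: images/train",   # labels are found by swapping images/ for labels/
        "val: images/val",
        "names:",
        # json.dumps quotes each name, which is also a valid YAML string.
        *(f"  {i}: {json.dumps(name)}" for i, name in enumerate(class_names)),
    ]
    out = dataset_dir / "data.yaml"
    out.write_text("\n".join(lines) + "\n")
    return out


def train(data_yaml: Path, base_model: str = "yolov8n.pt",
          epochs: int = 50, img_size: int = 640):
    """Fine-tune a pretrained YOLOv8 checkpoint (requires `pip install ultralytics`)."""
    from ultralytics import YOLO
    model = YOLO(base_model)  # starts from pretrained weights
    return model.train(data=str(data_yaml), epochs=epochs, imgsz=img_size)
```

By default Ultralytics writes results under `runs/detect/`, including the `.pt` weights (such as `weights/best.pt`) that the export step converts.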

Export for Deployment

- Once training is complete, you can export the model to TensorFlow Lite (.tflite) for mobile apps or to TensorFlow.js (a model.json file plus weight files) for web apps.

- Use the generated models with projects like yolo-tfjs to run everything client-side.
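With Ultralytics, both targets come from the same `.pt` checkpoint through `YOLO(...).export(format=...)`, using `"tfjs"` for TensorFlow.js and `"tflite"` for TensorFlow Lite. The sketch below maps a deployment target to that format string; the `web`/`mobile` target names and helper functions are hypothetical, and the output folder names noted in the comments reflect recent Ultralytics versions.

```python
EXPORT_FORMATS = {
    "web": "tfjs",       # TensorFlow.js: a *_web_model/ folder with model.json + weight shards
    "mobile": "tflite",  # TensorFlow Lite: a .tflite file for Android/iOS
}


def export_format(target: str) -> str:
    """Map a deployment target to the Ultralytics export format string."""
    try:
        return EXPORT_FORMATS[target]
    except KeyError:
        raise ValueError(
            f"unknown target {target!r}; choose from {sorted(EXPORT_FORMATS)}"
        ) from None


def export_model(weights: str, target: str):
    """Export trained weights (e.g. best.pt); returns the exported artifact's path."""
    from ultralytics import YOLO  # requires `pip install ultralytics` (plus TF deps)
    return YOLO(weights).export(format=export_format(target))
```

For instance, `export_model("runs/detect/train/weights/best.pt", "web")` would produce the TensorFlow.js files that a browser app such as yolo-tfjs loads.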

To see the whole process in action, watch the following video:

Conclusion

By integrating Label Studio, YOLO training, and multi-platform model export, this solution democratizes custom object detection. It offers a reproducible and automated workflow that simplifies the process of creating tailored models for diverse deployment targets.