Learning a new language always comes with its own set of challenges. One of the very first steps is building vocabulary. Knowing new words helps us understand and describe the world around us. Over time, we begin forming simple sentences, and eventually, we gain the ability to communicate — even if imperfectly. In the beginning, learning vocabulary is essential to express our ideas and needs.

About the Language

For those of us living in Latin America, German is not a language we often hear or come into contact with. The Netflix series Dark might be our closest exposure — and even then, the language can feel intimidating.

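One of the key aspects of mastering German is understanding and using articles. Articles are crucial: they tell us a noun's grammatical gender (der for masculine, die for feminine, das for neuter) and they change with case, so they shape how we read, write, and speak.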

(Translation: In 2006, I did my PhD on autonomous quantum thermodynamic machines... but German grammar is killing me.)

Cloud Translation and Vision API

When learning a language, we constantly run into words we don't know yet. There are plenty of well-established translators out there, but as engineers, we often like to combine existing tools into solutions tailored to our own needs.

I decided to create a small app that uses my phone's camera to take a picture, analyze it, and help me enrich my German vocabulary. For this, I used the Vision API to analyze the image and retrieve labels, then passed the top label to the Cloud Translation API to translate it into German.
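Before touching the real clients, the flow can be sketched with the two API calls passed in as plain async functions. This is a minimal sketch, not the app's code: `labelAndTranslate`, `detectLabels`, and `translateLabel` are hypothetical names, and the fake services stand in for Vision API and Cloud Translation so the data flow runs without credentials.

```javascript
// Hypothetical sketch of the app's data flow: image -> labels -> top label -> German.
// detectLabels and translateLabel are injected stand-ins, not Google Cloud methods.
async function labelAndTranslate(image, { detectLabels, translateLabel }) {
  // Step 1: ask the vision service which labels describe the image.
  const labels = await detectLabels(image);
  if (labels.length === 0) {
    return null; // nothing recognized, so nothing to translate
  }

  // Step 2: translate only the first (top) label.
  const translated = await translateLabel(labels[0]);
  return { label: labels[0], translated };
}

// Fake services so the sketch runs offline.
const fakeServices = {
  detectLabels: async () => ['cat', 'whiskers'],
  translateLabel: async (text) => ({ cat: 'die Katze' })[text] ?? text,
};

labelAndTranslate('photo.jpg', fakeServices).then((result) => {
  console.log(result); // { label: 'cat', translated: 'die Katze' }
});
```

Keeping the services injectable like this is just one way to unit-test the flow; the backend in this post calls the real clients directly.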

🤓 Let’s get started!

Google Cloud offers two main image recognition services: AutoML Vision and Vision API. Vision API is the easier starting point: it relies on pretrained models that return labels from a predefined set, so you don't need to train your own model to get started.
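Label detection returns its results as `labelAnnotations`, a list of objects that each carry (among other fields) a `description` and a confidence `score` between 0 and 1. The backend in this post simply takes the first entry; as a hedged alternative, the sketch below picks the highest-scoring label and ignores anything under a minimum score. The `pickTopLabel` name and the 0.7 threshold are assumptions for illustration, and the sample annotations are made up rather than real API output.

```javascript
// Hypothetical helper: choose the most confident label from a Vision API
// labelAnnotations-style array ({ description, score, ... }).
// MIN_SCORE is an assumed threshold, not a Vision API default.
const MIN_SCORE = 0.7;

function pickTopLabel(labelAnnotations = [], minScore = MIN_SCORE) {
  let best = null;
  for (const annotation of labelAnnotations) {
    // Skip weak guesses entirely.
    if (annotation.score < minScore) continue;
    if (best === null || annotation.score > best.score) {
      best = annotation;
    }
  }
  // Return just the label text, or null when nothing is confident enough.
  return best ? best.description : null;
}

// Made-up sample data shaped like a labelDetection response.
const sample = [
  { description: 'Whiskers', score: 0.91 },
  { description: 'Cat', score: 0.97 },
  { description: 'Carpet', score: 0.52 },
];

console.log(pickTopLabel(sample)); // Cat
console.log(pickTopLabel([{ description: 'Blur', score: 0.3 }])); // null
```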

Vision API can be used for label detection, object detection, product recognition, and text detection in images, among other things; for this project, we only need label detection. We'll create an Express endpoint that uses the express-formidable middleware to parse the uploaded image file and then process it with both APIs.

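```javascript
const express = require('express');
const formidable = require('express-formidable');
const cors = require('cors');

const PORT = process.env.PORT || 8080;

const app = express();

// Parse multipart/form-data uploads; uploaded files end up in req.files.
app.use(formidable());
// Enable CORS for cross-origin clients.
app.use(cors());

/* Cloud Translation API */
const translateFromImage = async (image) => {
  // Vision API processing will go here
};

app.post('/translate', async (req, res) => {
  // The app sends the photo in a form field named "data".
  const file = req.files && req.files.data;
  if (!file) {
    return res.status(400).json({ error: 'Missing image in form field "data"' });
  }
  try {
    const obj = await translateFromImage(file.path);
    return res.status(200).json(obj);
  } catch (err) {
    console.error(err);
    return res.status(500).json({ error: 'Could not process the image' });
  }
});

app.listen(PORT, () => console.log(`App listening on port ${PORT}`));
```

Next, install the @google-cloud/vision library with npm and define the PROJECT_ID of your Google Cloud project. Note that you must enable billing to use these APIs. You can find more details in the official documentation.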

```javascript
const vision = require('@google-cloud/vision');

// Replace the fallback with your own Google Cloud project ID.
const PROJECT_ID = process.env.projectID || 'map-visualisations';
```

Now we can implement the translateFromImage function. It calls the Vision API and extracts the top label from the image.

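```javascript
const translateFromImage = async (image) => {
  const client = new vision.ImageAnnotatorClient();

  // labelDetection accepts a local file path (here, the uploaded temp file).
  const [result] = await client.labelDetection(image);
  const labels = result.labelAnnotations || [];
  if (labels.length === 0) {
    throw new Error('No labels detected in the image');
  }

  // Use the first label in the response.
  return labels[0].description;
};
```

Once we have the label, we can translate it using Cloud Translation. Add the @google-cloud/translate library: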

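```javascript
const { TranslationServiceClient } = require('@google-cloud/translate');
```

Then update translateFromImage to perform the actual translation. The trick is to send "the" plus the label (for example, "the cat") instead of the bare label, so the German translation comes back with its definite article (for example, "die Katze"), which we then split into the article and the noun.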

```javascript
const translateFromImage = async (image, source = 'en', target = 'de') => {
  const client = new vision.ImageAnnotatorClient();
  const translationClient = new TranslationServiceClient();

  const [result] = await client.labelDetection(image);
  const labels = result.labelAnnotations || [];
  if (labels.length === 0) {
    throw new Error('No labels detected in the image');
  }

  const request = {
    parent: `projects/${PROJECT_ID}/locations/global`,
    // Prefix "the" so the translation includes the German definite article.
    contents: [`the ${labels[0].description}`],
    mimeType: 'text/plain',
    sourceLanguageCode: source,
    targetLanguageCode: target,
  };

  const [response] = await translationClient.translateText(request);
  const text = response.translations[0].translatedText;

  // The first word is the article; keep the rest so multi-word results aren't cut off.
  const [article, ...rest] = text.trim().split(/\s+/);
  return { article, substantive: rest.join(' ') };
};
```

Done! Now we have a simple backend service that labels an image with Vision API and translates the label into German with Cloud Translation. Check out the full source code on GitHub 👈.
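The request object and the article split in translateFromImage are plain data handling, so they can be pulled out and tested without calling Google Cloud. A minimal sketch, assuming two hypothetical helpers, `buildTranslationRequest` and `splitArticle` (not part of the repo): the request mirrors the fields used above, and the split treats only the first word as the article so a multi-word translation keeps all of its words. The German strings are typed by hand, not real API output.

```javascript
// Hypothetical helpers around the Cloud Translation call.
// The request fields mirror the ones used in translateFromImage.
function buildTranslationRequest(projectId, label, source = 'en', target = 'de') {
  return {
    parent: `projects/${projectId}/locations/global`,
    // Prefixing "the" nudges the translation to include a definite article.
    contents: [`the ${label}`],
    mimeType: 'text/plain',
    sourceLanguageCode: source,
    targetLanguageCode: target,
  };
}

// Split "die Katze" into { article: 'die', substantive: 'Katze' }.
// Only the first word is treated as the article; the rest is kept intact.
function splitArticle(translatedText) {
  const [article = '', ...rest] = translatedText.trim().split(/\s+/);
  return { article, substantive: rest.join(' ') };
}

const request = buildTranslationRequest('my-project', 'cat');
console.log(request.contents[0]); // the cat
console.log(splitArticle('die Katze')); // { article: 'die', substantive: 'Katze' }
console.log(splitArticle('das rote Auto')); // { article: 'das', substantive: 'rote Auto' }
```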

Frontend with expo.io

My good friend Uriel Miranda introduced me to expo.io — a framework for building apps using React Native. It makes working with hardware components like the camera super easy. You can check out the camera module documentation here.

To see the full mobile app code, visit the repo artikel_aus_ein_bild on GitHub.

Here’s the code responsible for sending the photo to our backend:

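```typescript
// Base URL of the backend service.
const BACKEND_URL = 'YOUR_ENDPOINT_HERE';

async function translate(imageURI: string): Promise<{ article: string; substantive: string }> {
  const formData = new FormData();

  // React Native's FormData accepts a { uri, name, type } object for file uploads.
  formData.append('data', {
    uri: imageURI,
    name: 'photo.jpg',
    type: 'image/jpeg',
  });

  const response = await fetch(`${BACKEND_URL}/translate`, {
    method: 'POST',
    body: formData,
  });
  if (!response.ok) {
    throw new Error(`Translation request failed with status ${response.status}`);
  }
  return response.json();
}

export default {
  translate,
};
```

And here's the JSX that highlights the article in the translated word: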

<TouchableWithoutFeedback onPressIn={reset}>

<Text style={styles.resultTranslation}>

<Text style={{ backgroundColor: articleColors[state.article].color }}>

{state.article}

</Text>

{state.substantive}

</Text>

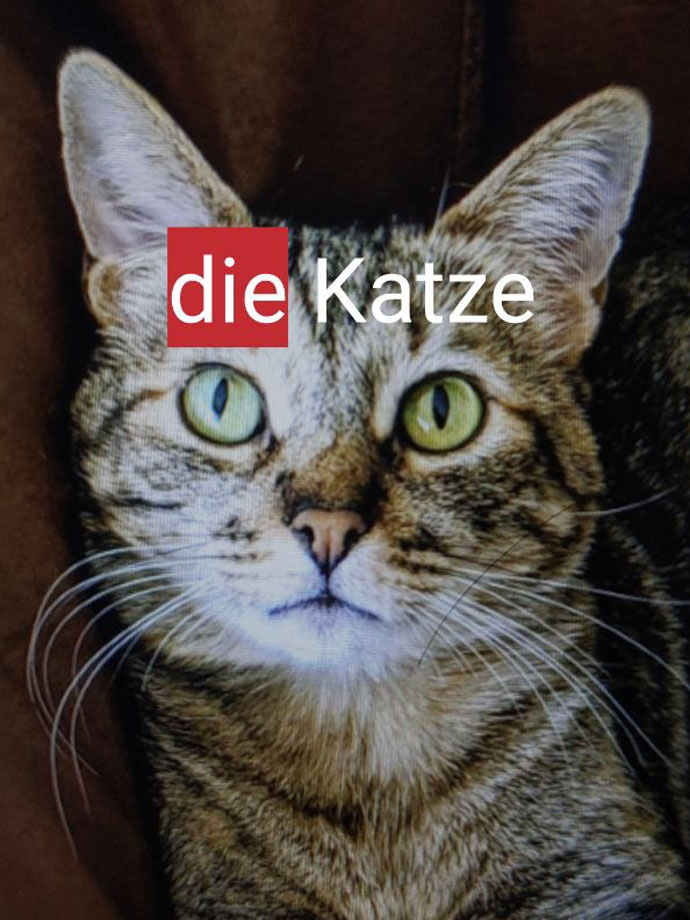

</TouchableWithoutFeedback>Here’s what the final result looks like:

Result with articles
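The highlighting code above looks up `articleColors[state.article].color`, but the map itself is not shown in the post. A minimal sketch of what it could look like, assuming the common learner convention of one color per grammatical gender (the colors and the `lookupArticle` helper are hypothetical): der, die, and das mark masculine, feminine, and neuter nouns in the nominative, and die is also the plural article. Normalizing case and falling back to a neutral color keeps an unexpected translation from breaking the render.

```javascript
// Hypothetical articleColors map, shaped like the one the JSX reads
// (articleColors[article].color). Colors are illustrative choices.
const articleColors = {
  der: { gender: 'masculine', color: '#4A90E2' },
  die: { gender: 'feminine (or plural)', color: '#E24A4A' },
  das: { gender: 'neuter', color: '#4AE290' },
};

// Fallback for anything the translation returns that is not der/die/das.
const FALLBACK = { gender: 'unknown', color: 'transparent' };

function lookupArticle(article) {
  // Normalize "Die" -> "die" so sentence-case output still matches.
  const key = String(article ?? '').trim().toLowerCase();
  // Own-property check avoids matching inherited keys like "constructor".
  return Object.prototype.hasOwnProperty.call(articleColors, key)
    ? articleColors[key]
    : FALLBACK;
}

console.log(lookupArticle('Die').color); // #E24A4A
console.log(lookupArticle('ein').gender); // unknown
```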

Final Thoughts

Building this app has helped me grow my German vocabulary just by exploring the world around me. It also helped me better understand powerful Google services like Vision API and Cloud Translation. While these services require a billing account, they give developers and companies robust tools to turn ideas into real-world products.

I plan to keep improving the app. If you'd like to contribute, feel free to open a pull request.

Here’s a quick demo of the app in action:

App demo
